8 Machine learning architecture principles for enterprise programs in 2023

Machine learning for seasoned software professionals – Part 1

In the realm of software architecture and in software engineering in general, seasoned professionals have witnessed and navigated the ebb and flow of technological evolutions, from the monolithic structures of yesteryears to today's microservices and cloud-native solutions.

As we stand on the brink of another revolution, characterized by the ubiquity of machine learning (ML) and artificial intelligence (AI), it becomes imperative for architects to adapt and thrive in this new landscape.

This is a long article so consider saving this post.

The Goal of this ML series

This article series, titled "Machine Learning for Seasoned Software Professionals," aims to bridge the chasm between conventional software architecture and the emerging domain of machine learning.

At its heart, the objective is twofold:

To demystify machine learning, distilling it into digestible principles that resonate with architectural paradigms familiar to senior architects.

To provide a roadmap, illuminating the path for these architects to integrate ML seamlessly into their existing architectural canvas, enriching it and amplifying its capabilities.

Benefits of knowing ML principles for senior software professionals

For senior architects and professionals who've sculpted software masterpieces over the years in the IT era, this series offers an opportunity to:

Understand ML not as a siloed discipline, but as an extension of their existing knowledge.

Harness ML to solve complex problems and introduce innovative solutions.

Leverage their architectural expertise to design ML systems that are scalable, robust, and ethically sound.

Stay ahead of the competitive curve by mastering the integration of ML into broader system architectures.

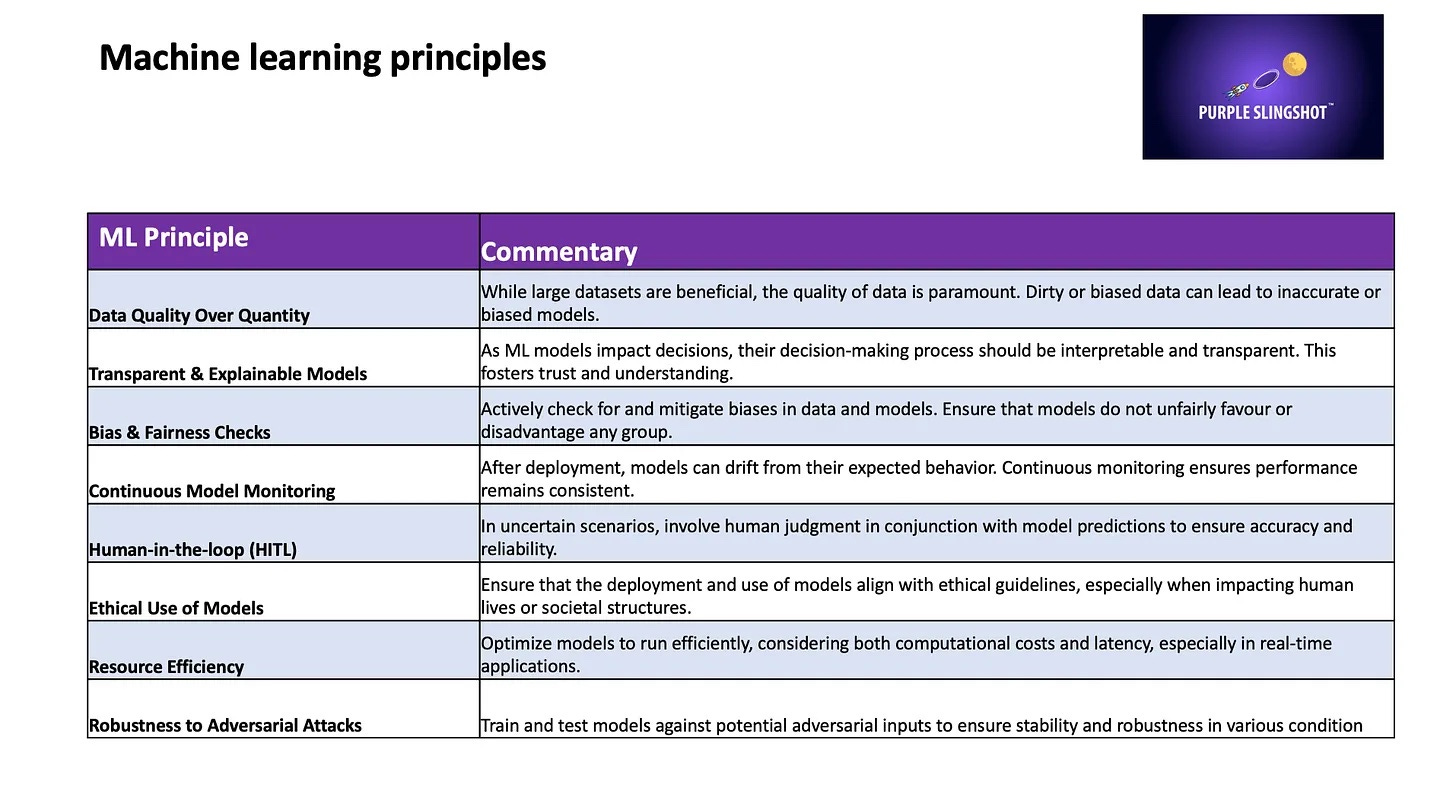

ML Principles in 2023 as I see them:

Before diving deep into the intricacies of ML algorithms or dissecting neural network layers in the upcoming series, it's crucial to lay a strong foundation based on core ML principles.

Just as the pillars of a building define its strength and aesthetic, these principles will determine the efficacy, fairness, and resilience of ML models. They will act as the North Star, guiding architects in making informed decisions throughout the ML journey.

A use case from healthcare to illustrate our ML principles:

Consider the arena of Healthcare, currently undergoing a significant digital transformation. Hospitals and healthcare providers are aiming to predict patient health risks, optimize treatment plans, and enhance patient outcomes through ML.

Imagine a system that predicts the likelihood of a patient being readmitted within 30 days after discharge.

(Refer: link for data source )

According to publicly available data, hospitals in Massachusetts lead the U.S. with an average patient readmission rate of 15.3%, closely followed by those in states like Florida and New Jersey.

Notably, these states, along with Rhode Island and Connecticut, are home to some of the nation's largest hospitals. With above-average bed counts and higher patient volumes, they also handle more Medicare discharges.

The older age of Medicare patients in these states often implies a prevalence of chronic conditions, co-morbidities, or post-acute care needs, factors that inherently elevate the risk of readmissions.

An ML model that predicts patient readmission within 30 days of discharge would be invaluable, enabling timely interventions and improved patient care.

For that value to materialize, the design of this system must embody the principles on our list:

High data quality ensures that the predictions are based on accurate health records.

Its decisions are transparent, allowing doctors to understand and trust the model's recommendations.

It undergoes bias & fairness checks to ensure all demographic groups receive equitable care.

Continuous monitoring and regular updates ensure the model's predictions remain relevant as new data emerges.

In uncertain cases, human-in-the-loop allows doctors to make the final call, using the model merely as a guiding tool.

And most importantly, the ethical use of this model respects patient data privacy and confidentiality.

This healthcare example will be our guiding narrative, illustrating the real-world implications of each ML principle we explore.

ML Principle 1 – Data Quality over Data Quantity

In the world of machine learning, the mantra "Data Quality over Data Quantity" resonates strongly.

Consider the scenario of hospital readmissions: while amassing extensive datasets on patient histories, treatments, and outcomes might seem advantageous, any inconsistencies, inaccuracies, or missing entries can jeopardize the effectiveness of predictive models.

Relying on such compromised data could result in models that produce misleading insights and guide ineffective patient care strategies.

From a technical standpoint, when machine learning systems are trained on vast but low-quality datasets, they can end up fitting the noise and errors in the data rather than the underlying signal. This is a form of overfitting, where the model becomes too closely adapted to the training data and performs poorly on new, unseen data.

Additionally, noisy or inaccurate data can skew the model's understanding, leading to biased or imprecise predictions.

Thus, for institutions aiming to reduce readmissions (our use case), it's imperative to prioritize the accuracy, relevance, and cleanliness of their data. A foundation built on high-quality data supports the development of robust and reliable machine learning models, ultimately driving better healthcare outcomes.
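To make the idea concrete, here is a minimal sketch of the kind of checks that could run before any training starts. The record fields (`encounter_id`, `age`, `length_of_stay_days`, `readmitted_30d`) and the plausibility limits are my own assumptions for illustration, not taken from a real hospital schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DischargeRecord:
    # Hypothetical fields for one hospital discharge.
    encounter_id: str
    age: Optional[int]
    length_of_stay_days: Optional[float]
    readmitted_30d: Optional[bool]


def quality_issues(record):
    """Return the list of problems found in a single record."""
    issues = []
    if record.age is None:
        issues.append("missing age")
    elif not 0 <= record.age <= 120:  # assumed plausibility range
        issues.append("implausible age")
    if record.length_of_stay_days is None:
        issues.append("missing length of stay")
    elif record.length_of_stay_days < 0:
        issues.append("negative length of stay")
    if record.readmitted_30d is None:
        issues.append("missing label")
    return issues


def quality_report(records):
    """Map encounter_id -> issues, including repeated encounter ids."""
    seen = set()
    report = {}
    for record in records:
        issues = quality_issues(record)
        if record.encounter_id in seen:
            issues.append("duplicate encounter_id")
        seen.add(record.encounter_id)
        if issues:
            report.setdefault(record.encounter_id, []).extend(issues)
    return report


records = [
    DischargeRecord("e1", 67, 4.0, False),
    DischargeRecord("e2", None, -1.0, True),
    DischargeRecord("e1", 67, 4.0, False),  # same encounter loaded twice
]
report = quality_report(records)
```

In practice, a report like this would likely gate the training pipeline: records with issues are fixed at the source or excluded, rather than silently fed to the model.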

ML Principle 2 – Transparent & Explainable Models

"Transparent & Explainable Models" in the context of machine learning refers to the design and implementation of algorithms in such a way that their decision-making processes can be understood and interpreted by humans.

In essence, it's not just about a model making accurate predictions but also about providing clarity on how it arrived at those predictions. Such transparency ensures that stakeholders can trust the model's outputs and can also identify potential biases or inaccuracies embedded within.

Now, pivoting to the healthcare domain and the challenge of hospital readmissions, the need for transparency and explainability becomes even more evident. When a machine learning model assesses and predicts a patient's likelihood of readmission, it's crucial for medical professionals to understand the underlying reasons behind such a prediction.

If the model is a "black box," it becomes challenging to act upon its recommendations confidently.

For instance, if a patient is flagged for high readmission risk due to certain medical indicators, understanding those specific indicators can lead to tailored care plans and interventions.

Conversely, if the transparency principle is overlooked, it could result in a lack of trust in the system, potential misdiagnoses, and missed opportunities for preemptive care, ultimately affecting patient outcomes.
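As a rough illustration of what "explainable" can look like, the sketch below uses a small logistic regression where each feature's contribution to the score can be read off directly. The feature names, weights, and bias are invented for this example; a real model would learn them from data, and non-linear models would need dedicated explanation techniques instead.

```python
import math

# Hypothetical, hand-picked coefficients for illustration only.
WEIGHTS = {
    "prior_admissions": 0.6,
    "age_over_75": 0.4,
    "has_followup_visit": -0.8,
}
BIAS = -1.5


def predict_with_explanation(features):
    """Return (readmission probability, contributions ranked by magnitude).

    Each contribution is weight * feature value, i.e. how much that feature
    moves the log-odds of readmission up or down.
    """
    contributions = {
        name: weight * features.get(name, 0.0)
        for name, weight in WEIGHTS.items()
    }
    log_odds = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


probability, ranked = predict_with_explanation(
    {"prior_admissions": 3, "age_over_75": 1, "has_followup_visit": 0}
)
top_factor = ranked[0][0]  # the feature that moved the score the most
```

A clinician seeing that prior admissions drive the score can respond with a targeted care plan, which is exactly the kind of action a bare probability does not support.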

ML Principle 3 – Bias and Fairness Checks

"Bias & Fairness Checks" in machine learning pertain to the systematic evaluation and mitigation of any prejudices or favouritism that may be present in the data or the model's decision-making process.

Ensuring bias-free and fair models means that predictions or decisions are made impartially, without undue weight given to certain groups or factors that could lead to unjust or unequal outcomes. This is of paramount importance to ensure that AI and machine learning systems work equitably and do not perpetuate existing prejudices.

Mapping this to the healthcare context, and more specifically to the challenge of hospital readmissions, the implications of bias can be profound.

For instance, if a predictive model inadvertently gives undue weight to factors like age, ethnicity, gender or socio-economic status when determining the risk of readmission, it could lead to skewed care recommendations.

Such biases might result in certain groups receiving more aggressive interventions, while others might be overlooked. In a real-world scenario, this could mean that two patients with similar medical conditions might receive different care plans or levels of attention based solely on biased model predictions.

By ensuring rigorous bias and fairness checks, healthcare institutions can safeguard against these disparities, ensuring every patient receives equitable care based on their genuine medical needs and not on unintentional biases present in the model.
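One simple way to start such a check is to compare how the model behaves across groups. The sketch below computes, per group, the share of patients flagged as high-risk and the recall (the share of actual readmissions the model caught). The group labels and records are made up for illustration, and which metrics and gaps are acceptable is a policy decision this code does not answer.

```python
from collections import defaultdict


def group_rates(records):
    """records: iterable of (group, flagged_high_risk, actually_readmitted)."""
    counts = defaultdict(lambda: {"n": 0, "flagged": 0, "pos": 0, "true_pos": 0})
    for group, flagged, readmitted in records:
        c = counts[group]
        c["n"] += 1
        c["flagged"] += int(flagged)
        c["pos"] += int(readmitted)
        c["true_pos"] += int(flagged and readmitted)
    return {
        group: {
            "flag_rate": c["flagged"] / c["n"],
            # Recall is undefined when a group has no actual readmissions.
            "recall": c["true_pos"] / c["pos"] if c["pos"] else None,
        }
        for group, c in counts.items()
    }


def max_gap(rates, metric):
    """Largest difference in a metric between any two groups."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values)


# Synthetic example data: (group, flagged, readmitted).
records = [
    ("A", True, True), ("A", False, True), ("A", False, False), ("A", True, False),
    ("B", True, True), ("B", True, True), ("B", False, False), ("B", False, False),
]
rates = group_rates(records)
flag_gap = max_gap(rates, "flag_rate")
recall_gap = max_gap(rates, "recall")
```

Here both groups are flagged at the same rate, yet the model misses half of group A's real readmissions. That is why looking at a single metric is rarely enough.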

ML Principle 4 – Continuous Model Monitoring

"Continuous Model Monitoring" is an essential practice in machine learning that revolves around consistently observing and evaluating a deployed model's performance over time.

As real-world data evolves and changes, there's a possibility that the model, which was initially trained on historical data, might no longer be as effective or accurate in its predictions. This degradation in predictive performance, caused by changes in the underlying data distributions, is termed "model drift."

In the context of healthcare and the challenge of hospital readmissions, neglecting model drift can have serious repercussions. Imagine a scenario where a model, initially trained on data from four years ago, is still in use today.

Given the advancements in medical treatments, evolving patient demographics, and other dynamic factors, the model's once-accurate predictions might now be misaligned with the current reality.

If, for instance, newer treatment methods have reduced the likelihood of readmissions for certain conditions, an outdated model might still flag patients as high-risk, leading to unnecessary interventions or prolonged hospital stays. This not only strains the healthcare system's resources but could also negatively impact patients' overall well-being.

Therefore, continuous model monitoring, especially in critical sectors like healthcare, is not just a technical necessity but a pivotal aspect of ensuring patient safety and optimal resource allocation.

By regularly checking for signs of model drift and recalibrating the model as needed, healthcare institutions can ensure that their predictive systems remain relevant, accurate, and beneficial for patient care.
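One widely used signal for this kind of check is the Population Stability Index (PSI), which compares how a feature was distributed at training time with how it is distributed now. The sketch below is a plain-Python version under my own assumptions: the bin edges, the sample ages, and the 0.25 alert level (a commonly cited rule of thumb, not a standard) are all illustrative.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two non-empty samples.

    `edges` are ascending bin boundaries; values below the first edge and
    at or above the last edge fall into the two outer bins.
    """
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(v >= e for e in edges)] += 1  # index of v's bin
        # Floor each share at eps so empty bins do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical patient ages at training time vs. today.
training_ages = [30, 45, 50, 62, 70, 81]
current_ages = [66, 72, 79, 83, 85, 90]
age_edges = [40, 60, 80]

drift_score = psi(training_ages, current_ages, age_edges)
drift_detected = drift_score > 0.25  # assumed alert threshold
```

A score of zero means the two distributions match bin for bin; the older current population in this toy example pushes the score well above the alert level.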

In the realm of architecture, handling model drift requires a proactive and adaptive framework. This typically involves establishing automated monitoring tools that consistently track the model's performance metrics and compare predictions against real-world outcomes.

Whenever a significant deviation or degradation in performance is detected, alerts are generated to notify relevant stakeholders.

Additionally, implementing a feedback loop, where real-time data is continuously ingested and periodically used to retrain the model, can ensure that the system remains updated.

Architecturally, this means incorporating data pipelines for streamlined data collection, validation, and pre-processing, as well as automated model training and deployment mechanisms. By doing so, the architecture remains resilient to changes in the data landscape, ensuring that models are always optimized for the most recent data patterns and trends.
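The monitoring and alerting part of that architecture can be sketched in a few lines. The class below keeps a rolling window of prediction-versus-outcome results and signals when accuracy falls meaningfully below the level measured at deployment. The class name, window size, and tolerance are hypothetical choices; a production setup would typically publish this signal to an alerting system and trigger a retraining pipeline.

```python
from collections import deque


class PerformanceMonitor:
    """Tracks rolling accuracy of a deployed model against real outcomes."""

    def __init__(self, baseline_accuracy, tolerance=0.05, window=100):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # Only the most recent `window` results are kept.
        self.results = deque(maxlen=window)

    def record(self, predicted, actual):
        """Store whether a prediction matched the observed outcome."""
        self.results.append(predicted == actual)

    def rolling_accuracy(self):
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_retraining(self):
        """True when rolling accuracy drops below baseline minus tolerance."""
        accuracy = self.rolling_accuracy()
        return accuracy is not None and accuracy < self.baseline - self.tolerance


monitor = PerformanceMonitor(baseline_accuracy=0.80, tolerance=0.05, window=4)
for predicted, actual in [(True, True), (False, False), (True, False), (False, True)]:
    monitor.record(predicted, actual)
alert = monitor.needs_retraining()
```

Note that in the readmission use case the true outcome only becomes known 30 days after discharge, so a monitor like this always lags behind the predictions it evaluates.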

ML Principle 5 – Human-in-the-loop (HITL)

"Human-in-the-loop (HITL)" is a machine learning paradigm where human judgment collaborates with algorithmic processing to achieve superior results.

Instead of relying purely on automated systems, HITL acknowledges that there are scenarios where human expertise and intuition can provide invaluable guidance, especially in instances where the data is ambiguous, incomplete, or outside the model's training experience.

By incorporating human feedback directly into the learning process, models can be refined, errors can be rectified, and the overall system becomes more adaptable and effective.

In a healthcare context, such as predicting hospital readmissions, HITL plays a critical role. While machine learning models can analyze vast datasets and identify patterns that might be imperceptible to humans, there are nuances in patient care and clinical judgment that may not be easily captured by algorithms.

For instance, when a model flags a patient as high-risk for readmission, a senior clinician could review the case, considering not just the data but also their clinical experience and the patient's unique circumstances.

This feedback can then be looped back into the system, helping refine the model's predictions over time. By ensuring a collaborative decision-making process, HITL architectures combine the strengths of both machine-driven insights and human expertise, driving better patient outcomes and fostering continuous model improvement.
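A minimal sketch of such a loop is shown below: predictions the model is unsure about are routed to a clinician, and the clinician's decision is stored as labelled feedback for a later retraining run. The class name, the 0.3 and 0.7 thresholds, and the feedback format are assumptions for illustration; real thresholds would be set together with clinical staff.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewQueue:
    """Routes uncertain predictions to clinicians and collects their labels."""
    low: float = 0.3   # at or below: treated as low risk (assumed threshold)
    high: float = 0.7  # at or above: treated as high risk (assumed threshold)
    pending: list = field(default_factory=list)
    feedback: list = field(default_factory=list)

    def triage(self, patient_id, probability):
        """Decide automatically when confident, otherwise queue for review."""
        if self.low < probability < self.high:
            self.pending.append(patient_id)
            return "clinician_review"
        return "high_risk" if probability >= self.high else "low_risk"

    def record_decision(self, patient_id, features, clinician_label):
        """Close a pending review and keep the label for future retraining."""
        self.pending.remove(patient_id)
        self.feedback.append((features, clinician_label))


queue = ReviewQueue()
confident = queue.triage("p1", 0.90)
uncertain = queue.triage("p2", 0.50)
queue.record_decision("p2", {"prior_admissions": 2}, clinician_label=True)
```

The same structure could also send every high-risk flag for review, as in the senior clinician example above; only the routing rule in `triage` would change.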

ML Principle 6 – Ethical Use of Models

"Ethical Use of Models" emphasizes the importance of ensuring that machine learning models are used responsibly, fairly, and transparently. As machine learning systems increasingly influence various aspects of our lives, it's paramount to ensure that these models do not inadvertently perpetuate biases, misrepresent certain groups, or make decisions that could be deemed unethical.

Ethical considerations should encompass fairness, accountability, transparency, and the potential societal impacts of the decisions made by the model. The goal is to harness the power of machine learning while safeguarding individual rights and ensuring that models serve the broader good.

Within the healthcare sector, the ethical use of models takes on an added layer of significance due to the direct implications on patient health and well-being.

Consider the case of hospital readmissions again.

If a model were trained on biased data, it could, for instance, unjustly categorize certain demographic groups as high-risk for readmission, leading to unnecessary interventions or overlooking others who might genuinely be at risk.

Such biases could arise from historical data that reflects past prejudices or systemic inequities. Ensuring the ethical use of models means constantly vetting and validating data sources, understanding the origins of any biases, and rectifying them before deployment.

Furthermore, stakeholders, including clinicians and patients, should be informed about how the model makes its decisions, emphasizing transparency (discussed in Principle 2) and trust. As healthcare is inherently tied to life-altering decisions, the ethical use and oversight of predictive models are not just technical imperatives but moral obligations.

ML Principle 7 – Resource Efficiency and Sustainability

In machine learning architecture, "resources" typically refer to computational power, memory, storage, and the network bandwidth necessary to train, validate, and deploy models. These resources can significantly influence the performance, speed, and scalability of ML systems. Ensuring efficient and sustainable use of these resources is paramount to the cost-effectiveness and feasibility of deploying ML solutions at scale.

With the growing prominence of technology in every sector, sustainability initiatives have become paramount. Overly complex machine learning models, which demand significant power and produce high carbon emissions, can unintentionally derail an organization's broader sustainability objectives.

Consider our healthcare use case of predicting hospital readmissions. A complex deep learning model might offer marginally better accuracy but could demand significantly more computational power and memory for training and inference.

If deployed in real-time clinical environments, such models can introduce unacceptable latencies, hindering swift decision-making. Moreover, the cost associated with running these resource-intensive models can overshadow the marginal benefits they bring in terms of prediction accuracy.

Simply put, it might not be financially justifiable for a hospital to invest in top-tier hardware infrastructure just to support an overly complex model, especially when slightly simpler models could deliver nearly equivalent accuracy at a fraction of the resource cost.
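That trade-off can be written down as an explicit selection rule: among the models that meet the latency budget, take the cheapest one whose accuracy is within a small tolerance of the best. The sketch below does exactly that. All model names, AUC values, latencies, and costs are invented numbers for illustration, not benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    auc: float               # validation AUC (hypothetical)
    latency_ms: float        # inference latency per prediction (hypothetical)
    monthly_cost_usd: float  # estimated running cost (hypothetical)


def pick_model(candidates, max_latency_ms, auc_tolerance=0.01):
    """Cheapest candidate within the latency budget and near the best AUC."""
    eligible = [c for c in candidates if c.latency_ms <= max_latency_ms]
    if not eligible:
        raise ValueError("no candidate meets the latency budget")
    best_auc = max(c.auc for c in eligible)
    good_enough = [c for c in eligible if c.auc >= best_auc - auc_tolerance]
    return min(good_enough, key=lambda c: c.monthly_cost_usd)


candidates = [
    Candidate("logistic_regression", auc=0.780, latency_ms=2, monthly_cost_usd=50),
    Candidate("gradient_boosting", auc=0.810, latency_ms=10, monthly_cost_usd=200),
    Candidate("deep_network", auc=0.815, latency_ms=120, monthly_cost_usd=3000),
]
chosen = pick_model(candidates, max_latency_ms=200, auc_tolerance=0.01)
```

With these numbers the deep network's small accuracy edge does not justify its cost, so the rule picks the gradient boosting model. Making the tolerance an explicit parameter forces the team to state how much accuracy it is willing to trade for efficiency.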

Cloud providers like Azure and AWS have recognized these challenges and offer services tailored for efficient machine learning operations. Both platforms provide managed ML services—Azure Machine Learning and Amazon SageMaker—that allow developers to choose the right compute instances based on the complexity and demands of their models.

Moreover, these platforms offer hardware acceleration, such as GPU and FPGA options, which can shorten model training and inference times, although accelerated instances typically cost more per hour, so the speed-up has to be weighed against the price. Auto-scaling features allocate resources dynamically based on demand, reducing wasted computational power during low-demand periods.

ML Principle 8 – Robustness to Adversarial Attacks

In the realm of machine learning, robustness denotes the ability of a model to maintain its performance even when it encounters inputs that are deliberately crafted to deceive or mislead it. These malicious inputs are termed "adversarial examples," and the attempts to deceive the model are known as adversarial attacks. Common adversarial attacks include the Fast Gradient Sign Method (FGSM), DeepFool, and the Boundary Attack.

Essentially, an adversarial attack perturbs the input data slightly, often imperceptibly to humans, aiming to cause the model to make incorrect predictions or classifications. Ensuring that machine learning models can resist or recover from such attacks is crucial, especially in critical applications like healthcare, finance, or security.
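To show the mechanics, here is a small sketch of FGSM against a logistic regression model. FGSM nudges every input feature by a fixed amount epsilon in the direction that increases the model's loss. The weights, bias, input values, and epsilon below are invented for illustration; for logistic regression with cross-entropy loss, the gradient of the loss with respect to the input is (p - y) * w, which is what the code uses.

```python
import math


def predict(weights, bias, x):
    """Logistic regression probability for input vector x."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    return (value > 0) - (value < 0)


def fgsm(weights, bias, x, y_true, epsilon):
    """Return x shifted by epsilon along the sign of the loss gradient."""
    p = predict(weights, bias, x)
    # Gradient of cross-entropy loss with respect to each input feature.
    gradient = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]


# Hypothetical model and a patient correctly scored as likely to be readmitted.
weights, bias = [1.2, -0.7], -0.2
x, y_true = [0.5, 0.3], 1

x_adv = fgsm(weights, bias, x, y_true, epsilon=0.2)
original_score = predict(weights, bias, x)       # above 0.5
adversarial_score = predict(weights, bias, x_adv)  # pushed below 0.5
```

In this toy case a shift of 0.2 per feature is enough to flip the prediction. One common defense, adversarial training, feeds examples like `x_adv` back into the training set with their correct labels.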

Revisiting our healthcare example, imagine a system that predicts the likelihood of hospital readmission. An adversarial attack on this system might involve making slight changes to patient data entries to maliciously manipulate the predictions of the model.

This could be done for a variety of reasons—perhaps to falsely lower readmission risks for certain patients or to intentionally strain hospital resources by exaggerating risks. If the model is not robust to these attacks, the consequences can be severe, leading to inappropriate patient care, misallocation of resources, or erroneous health outcomes.

While the theoretical threat of adversarial attacks has been known for some time, their practical implications in real-world scenarios have become more pronounced with the increasing deployment of ML systems. This has led to the development of a subfield dedicated to understanding these attacks and devising defenses against them.

Additionally, platforms are beginning to offer runtime monitoring to detect unusual input patterns, which could be indicative of an adversarial attack, and respond appropriately.
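A very simple form of such runtime monitoring is to compare each incoming record with the value ranges seen during training. The sketch below flags features that lie many standard deviations from the training mean. The class name, the threshold of 4, and the sample data are my own assumptions. This kind of check catches crude tampering and data-entry errors, but not the small, carefully crafted perturbations described earlier, so it complements rather than replaces other defenses.

```python
import statistics


class InputGuard:
    """Flags features that are far outside the training distribution."""

    def __init__(self, training_rows, z_threshold=4.0):
        columns = list(zip(*training_rows))
        self.means = [statistics.fmean(col) for col in columns]
        # Fall back to 1.0 for constant columns to avoid division by zero.
        self.stdevs = [statistics.pstdev(col) or 1.0 for col in columns]
        self.z_threshold = z_threshold

    def suspicious_features(self, row):
        """Return indexes of features whose z-score exceeds the threshold."""
        return [
            index
            for index, (value, mean, stdev) in enumerate(zip(row, self.means, self.stdevs))
            if abs(value - mean) / stdev > self.z_threshold
        ]


# Hypothetical training rows: [age, length_of_stay_days].
training_rows = [[60, 3], [70, 5], [65, 4], [75, 6]]
guard = InputGuard(training_rows)

normal = guard.suspicious_features([68, 4])
tampered = guard.suspicious_features([68, 40])  # implausibly long stay
```

Flagged records could be held back from automated scoring and sent for manual review, which ties this principle back to human-in-the-loop.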

Next steps and conclusion:

The world of machine learning is vast, dynamic, and ever-evolving. As we integrate these models more deeply into critical enterprise infrastructures, understanding and adhering to key principles like data quality, transparency, fairness, continuous monitoring, human-in-the-loop interactions, ethical considerations, resource efficiency, and robustness becomes paramount.

Our exploration, particularly using the lens of healthcare and the example of hospital readmissions, underscores the profound implications of these principles on the outcomes, costs, and overall efficacy of ML deployments.

While this series endeavours to provide a comprehensive overview for seasoned software architects transitioning to or engaging more deeply with machine learning, the field's dynamism means that there's always more to learn, adapt, and refine. Therefore, your insights, feedback, and shared experiences are invaluable.

This is a newsletter so you can share it.

It's through collaborative discussions and knowledge sharing that we can ensure the content remains current, relevant, and beneficial for all.